Does ChatGPT Really Use Language Like You Do? The Answer Might Surprise You (Part III)

Three shared features of LLM and human linguistic systems, and what they imply about language and cognition at large

In Part I of this five-part series on human versus LLM capabilities, I described some of the mechanisms underlying LLM language use.

In Part II, I presented some mechanisms underlying human language use.

Now, in this piece, I will share three fundamental similarities between how LLMs and humans (as modeled by the exemplar frameworks I introduced last time) process and produce language, along with what these similarities imply about the nature of linguistic cognition, biological and artificial.

Considering that LLMs were not explicitly designed to mimic how humans internally engage with language (as evidenced by the dissimilarities we'll explore in Part IV), these parallels suggest to me that human-like linguistic behavior might arise from general-purpose learning systems — that is, perhaps certain strategies for (first) language acquisition and generation naturally emerge and operate effectively across both biological and artificial domains.

The Gist of the Three Similarities

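LLM and human linguistic capabilities are similar in that they both:

(1) develop syntactic competence without relying on tree structures or grammatical rules,

(2) organize linguistic items in a multidimensional vector space based on similarity, and

(3) acquire language without innate grammatical categories.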

What does that mean, and why does it matter?! Allow me to explain.

Similarity (1):

Syntactic Knowledge Without Trees or Grammatical Propositions

For ChatGPT and exemplar-modeled human language alike, knowledge of syntax emerges not from pre-defined sets of syntactic rules and tree structures, but from exposure to linguistic units.

As described in Part I, ChatGPT relies on statistical information about linguistic frequency, rather than on formalized syntactic rules, to generate grammatical agreement.

For instance, consider the following exchange:

(GPT’s perennially laid-back tone with me is a continuous source of amusement in my life).

In generating verbs like “offers,” ChatGPT is not relying on a syntax tree in its architecture that enforces a grammatical rule.

(For those curious about the technicalities of what such a rule could look like, I will describe one in this paragraph, although these details are not central to the argument, so scroll ahead as you please: in a Chomskyan syntactic paradigm, the example sentence is a Tense Phrase (TP) whose [+Present] T head carries person‑ and number‑agreement features. The main verb — “offer” in “he offers” — is first generated lower in the structure inside a vP/VP. During the derivation, that verb must either raise to T or have its features merged with T so that the tense head’s agreement features can be checked against those of the subject “he,” which sits in Spec‑TP. Once this feature‑checking is satisfied, the grammar’s morphological component realizes the appropriate agreement marker: in this case, the third‑person singular present inflectional morpheme “‑s”).

By contrast, ChatGPT learns from statistical co-occurrences in its training data that, when preceded by whichever tokens make up “Substack,” the tokens making up “offer” are distributionally most likely to be produced with an “s” (or a token containing “s”) in that context.

Put otherwise: the model is reflecting patterns in human language usage. Presumably, instances of “Substack offer” (situated relatively early on in a sentence — recall positional encoding, discussed in Part I) are few and far between in its training data compared to “Substack offers.”
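To make the frequency intuition concrete, here is a deliberately simplified Python sketch. It assumes a bigram counter (how often each word immediately follows another in a tiny, invented corpus) as a stand-in for what a transformer learns; ChatGPT actually works over subword tokens with attention and positional encoding, so this only illustrates how “offers” can outcompete “offer” after “Substack” purely on the basis of co-occurrence counts.

```python
from collections import Counter

# Tiny invented corpus (an assumption for illustration only). "substack offer"
# does occur, but only inside a question, where "does" carries the agreement.
corpus = [
    "substack offers niche topics",
    "substack offers newsletters",
    "substack offers paid subscriptions",
    "they offer niche topics",
    "why does substack offer so much",
]


def next_word_probs(sentences, prev_word):
    """Relative frequency of each word that immediately follows prev_word.

    A bigram model like this ignores sentence position entirely; a transformer
    additionally conditions on position and on the whole preceding context.
    """
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            if prev == prev_word:
                counts[nxt] += 1
    total = sum(counts.values())
    if total == 0:
        return {}  # prev_word never appears with a following word
    return {word: n / total for word, n in counts.items()}


print(next_word_probs(corpus, "substack"))  # {'offers': 0.75, 'offer': 0.25}
```

No agreement rule appears anywhere in the code; the preference for “offers” falls out of the counts alone.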

Similarly, according to many exemplar-based models of human syntax, syntactic phenomena such as verb agreement are not governed by the generativist propositions I described in Part II, and syntax trees may be seen as descriptive tools rather than mental representations.

Instead, perceived linguistic inputs may activate stored syntactic exemplars, which then become readily available for retrieval during production.

For instance, after producing “Substack,” a speaker is more likely to retrieve the “offers” exemplar than the “offer” one, because “Substack” frequently co-occurs with verb forms that carry the third-person singular “-s” ending.

To be clear, language is indisputably hierarchically structured. For example, in the sentence “ChatGPT said that Substack offers niche topics,” the clause “Substack offers niche topics” is embedded within the larger clause rather than being a separate string of words. Further, in the question “What did ChatGPT say?” the word “what” stands in for that kind of embedded clause: it originates as the complement of “say” but appears at the beginning of the question, so interpreting it correctly requires an understanding of hierarchical sentence structure.

Exemplar models account for this non-linear nature of language by assuming that stored utterances can include information about internal structure. With repeated exposure, speakers abstract these patterns, allowing hierarchy to emerge from experience, rather than from innate tree-based rules.

Similarity (2):

Computed Vector-Space Similarity Between Linguistic Items Across Dimensions

Within both ChatGPT and exemplar-modeled human language processing, linguistic units can be classified according to their similarity to other linguistic data in terms of semantic, phonological, and other attributes.

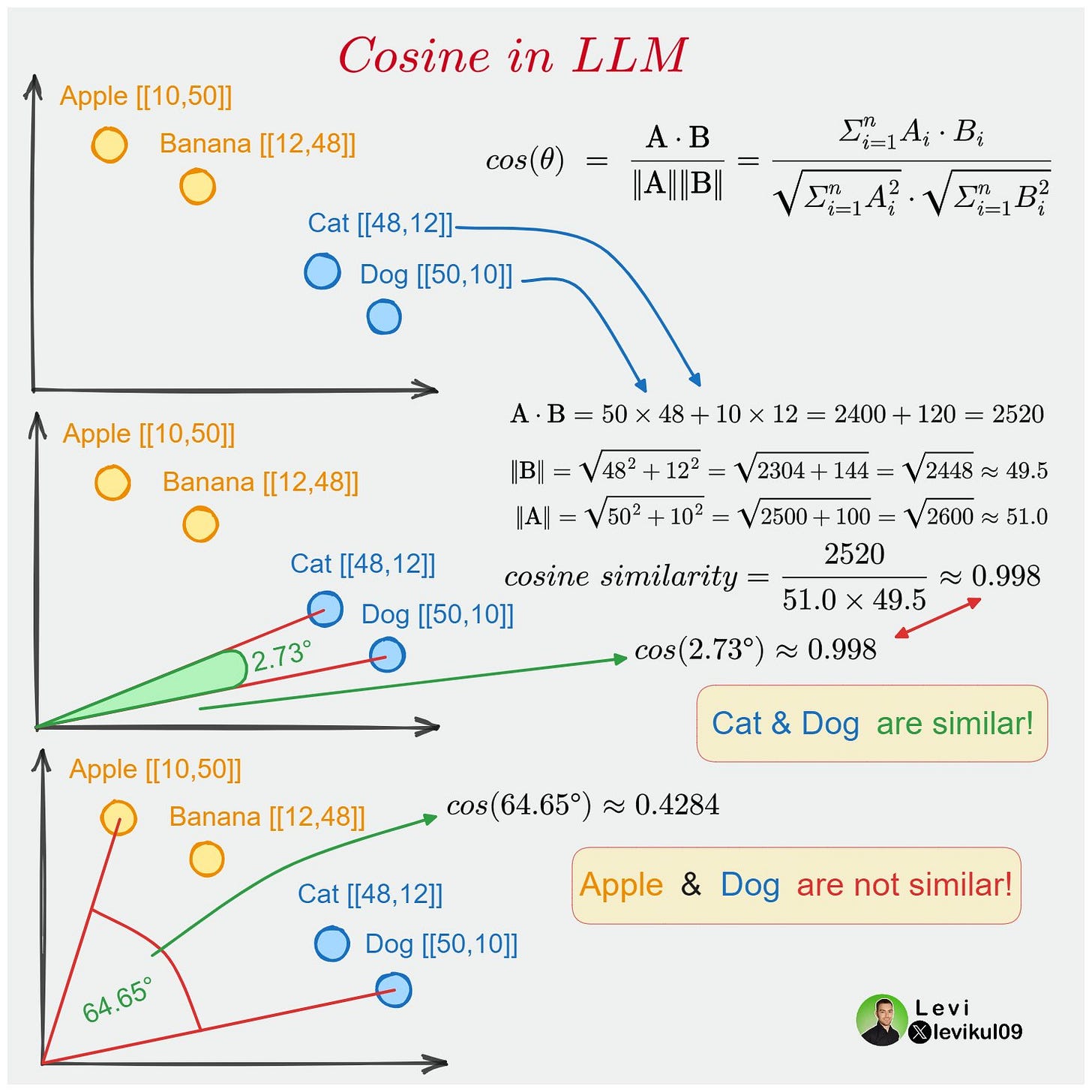

Cosine similarity or other distance metrics (for example, Euclidean distance) can be used to measure the “closeness” of two word embeddings in the GPT vector space across semantic, phonological, and other features less routinely attended to by humans (for instance, whether or not two expressions contain twenty-four characters each — recall the discussion in Part I on GPT’s uninterpretable embeddings).
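A minimal Python sketch of the two metrics, assuming made-up four-dimensional vectors (real GPT embeddings have far more dimensions, and their individual dimensions carry no human-readable labels). Cosine similarity compares only the direction of two vectors, while Euclidean distance also reflects their magnitudes.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def euclidean_distance(u, v):
    """Straight-line distance between u and v: 0.0 means identical vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Hypothetical toy "embeddings"; the values are invented for illustration.
table = [0.9, 0.1, 0.3, 0.0]
desk = [0.8, 0.2, 0.4, 0.1]
banana = [0.1, 0.9, 0.0, 0.7]

# "table" should come out closer to "desk" than to "banana" under both metrics.
print(cosine_similarity(table, desk), cosine_similarity(table, banana))
print(euclidean_distance(table, desk), euclidean_distance(table, banana))
```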

Likewise, in exemplar-based models describing human language capacities, linguistic similarity between exemplars can be computed as “distance” between existing exemplars in a given category.
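One common way exemplar models turn distance into similarity, in the style of Nosofsky's Generalized Context Model, is exponential decay: identical exemplars get similarity 1.0, and similarity shrinks as distance grows. The sketch below assumes two invented phonetic features per stored token and a hypothetical sensitivity parameter `c`; it is an illustration of the idea, not a model of any real speaker.

```python
import math


def distance(x, y):
    """Euclidean distance between two exemplars' feature vectors."""
    return math.dist(x, y)


def similarity(x, y, c=1.0):
    """Exponential-decay similarity: 1.0 for identical exemplars, falling toward 0.

    c is a hypothetical sensitivity parameter; larger c makes similarity drop faster.
    """
    return math.exp(-c * distance(x, y))


# Hypothetical stored exemplars of "table", each reduced to two invented,
# normalized phonetic features (not real acoustic measurements).
table_exemplars = [(0.50, 0.30), (0.55, 0.28), (0.48, 0.35)]
new_token = (0.52, 0.31)

for exemplar in table_exemplars:
    d = distance(new_token, exemplar)
    s = similarity(new_token, exemplar, c=5.0)
    print(exemplar, round(d, 3), round(s, 3))
```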

Various studies also suggest that the vector space of LLMs’ shallow word embedding models captures context-dependent knowledge that correlates significantly with human ratings of how closely related concepts are.

Similarity (3):

Rejection of Innate Grammatical Abstractions (and a Challenge to Chomsky)

According to versions of both frameworks, linguistic knowledge emerges from aggregate, usage-based experience rather than from an intrinsic possession of abstract grammatical categories such as [NOUN] and [VERB].

For that reason, both architectures forgo nativist assumptions about the importance, and indeed the very existence, of innate knowledge in first language acquisition.

As mentioned in Part II, according to the famous nativist “poverty of the stimulus” argument, the linguistic input that children receive is insufficient for developing a wide range of generalizations about language structure — unless they possess innate linguistic knowledge, including “abstractions.”

One such famous innate “abstraction” posited by nativists is the monomorphemic word as an intrinsic concept: for example, “table” as a representation of the category which corresponds to what every instance of the word “table” describes.

The nativist idea is that this category accelerates children’s acquisition of words: learned instances of “table” land within the confines of an abstract “table” grouping, which is then subsumed by [NOUN] and becomes subject to language-specific propositions such as “[ADJ] + [NOUN]” (e.g., you say “blue table” and not “table blue” in English).

However, the suggestion that humans are born with abstractions of lexical items faces issues because it is, once again, not possible to define this abstract category in a way that would include everything — and only everything — that a “table” could (and couldn’t!) be.

Indeed, as Ambridge (2020) explains, “any conceivable rules for defining a table (e.g. ‘has legs’; ‘used for eating’; ‘made of wood, metal or plastic’; ‘waist height’) can be easily dismissed with counterexample.”

Is a dog a table, seeing that it lends itself to the upward support of objects? (As a dog devotee, I would certainly hope not!). Conversely, could a dollhouse table not be qualified as one, on account of its size?

To the exemplar theorist, however, these concerns dissolve, as each utterance of “table” — whether in reference to a McDonald’s booth or toy furniture — is stored in the “table” exemplar cloud by dint of its observed phonetic and contextual properties.

(E.g., if it sounds like “table,” it represents a “table.” How humans learn phonetics and language in general given the extraordinary amount of variability in human speech — for instance, my pronunciation of “table” is not acoustically identical to my father’s — is an equally astounding phenomenon).

The posited innate abstraction of nouns is also challenged by empirical findings: when asked to sort stimuli into prototypical categories, participants preferentially place items they have already encountered into those categories, which points to stored instances rather than abstractions alone.

This problem is, again, circumvented by exemplar-based models, where each instance of a “table” utterance contributes to an aggregated, flexible “table” cloud.

This cloud is activated based on the cumulative weight or frequency of these exemplars — the more stored, the stronger the cloud’s “activation.”
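A minimal sketch of cloud activation, assuming (as in many exemplar models) that each stored token contributes an exponentially decaying similarity to the probe and that the contributions are summed. The features, the decay rate `c`, and the clouds are all invented; the Luce-style choice rule at the end is one common way such models turn competing activations into a categorization probability.

```python
import math


def activation(probe, cloud, c=5.0):
    """Summed similarity of a probe to every stored exemplar in a cloud.

    Each stored token contributes exp(-c * distance), so storing more tokens
    near the probe raises the cloud's total activation.
    """
    return sum(math.exp(-c * math.dist(probe, exemplar)) for exemplar in cloud)


def choice_probability(probe, target_cloud, competitor_cloud, c=5.0):
    """Luce-style choice rule: target activation divided by total activation."""
    a_target = activation(probe, target_cloud, c)
    a_competitor = activation(probe, competitor_cloud, c)
    return a_target / (a_target + a_competitor)


# Hypothetical two-feature exemplars; the numbers are invented for illustration.
heard_twice = [(0.52, 0.31), (0.47, 0.29)]
heard_six_times = heard_twice * 3  # same tokens, encountered three times as often

probe = (0.50, 0.30)
print(activation(probe, heard_twice))
print(activation(probe, heard_six_times))  # three times the value above
print(choice_probability(probe, heard_six_times, heard_twice))
```

Because activation is a sum, frequency matters directly: the same kinds of tokens, stored three times as often, yield three times the activation and win the competition three times out of four.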

Comparable to exemplar-modeled human language, ChatGPT is not programmed with preset nativist abstractions like [NOUN], and yet can still (manifestly) learn and generate human language with marked success.

This acquisition of first language sans pre-programmed grammatical categories has been argued to undercut “virtually every strong claim for the innateness of language.”

Nativists are quick to counter this claim by pointing out that ChatGPT requires orders of magnitude more linguistic input than human children do to form semblances of grammatical competence.

However, the BabyLM undertaking of Warstadt et al. (2023) suggests otherwise: neither innate human-like language knowledge nor gargantuan amounts of training data may be necessary for building linguistic competence.

BabyLM evaluates, on a standard syntactic benchmark, language models trained on amounts of input comparable to what human children receive. It found that some of these models outperformed models trained on trillions of words (and even came within 3% of human linguistic performance!).
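Syntactic benchmarks of this kind (BLiMP is a widely used example) typically score a model on minimal pairs: two sentences that differ in one grammatical detail, where the model counts as correct if it assigns higher probability to the grammatical one. The sketch below shows only that scoring logic. It assumes a tiny invented training text and uses an add-one-smoothed bigram model as a stand-in scorer; the actual BabyLM models are neural language models.

```python
import math
from collections import Counter

# Invented toy training text (an assumption); "." separates sentences.
corpus = "substack offers topics . they offer topics . he offers help . we offer help ."
tokens = corpus.split()
unigrams = Counter(tokens)
bigrams = Counter(zip(tokens, tokens[1:]))
vocab_size = len(unigrams)


def log_prob(sentence):
    """Add-one-smoothed bigram log-probability of a sentence (a stand-in scorer)."""
    words = sentence.split()
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        total += math.log((bigrams[(prev, nxt)] + 1) / (unigrams[prev] + vocab_size))
    return total


# Each pair is (grammatical, ungrammatical), differing only in verb agreement.
# Neither sentence in either pair appears verbatim in the training text.
minimal_pairs = [
    ("he offers topics", "he offer topics"),
    ("we offer topics", "we offers topics"),
]

correct = sum(log_prob(good) > log_prob(bad) for good, bad in minimal_pairs)
print(f"accuracy: {correct}/{len(minimal_pairs)}")  # accuracy: 2/2
```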

This result comports with other findings that transformer-based models can, perhaps unexpectedly, acquire understanding of syntax (including complex phenomena called island effects) equally well whether trained on the amounts of data to which children are exposed, or on much larger datasets.

While Millière & Buckner (2024) note that these findings are preliminary, it remains evident that ChatGPT acquires linguistic competence without appealing to abstract grammatical representations such as [NOUN], which undermines the purported generativist “necessity” of innate linguistic category representations.

But Wait — About Abstraction! I’m Keeping My Eyes Peeled for This Future Finding:

One might raise an interesting point here: while ChatGPT is not “preprogrammed” with abstract understandings like [NOUN] and sentence order, nativist-style categories could emerge from its proclivity for detecting statistical co-occurrences in language.
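As a toy illustration of the mechanism this argument imagines, and not as evidence about what ChatGPT actually does, the Python sketch below builds context-count vectors from an invented corpus. Words that occur in the same kinds of contexts end up with similar vectors, so a noun-like group and a verb-like group fall out without any [NOUN] or [VERB] label being supplied.

```python
import math
from collections import Counter, defaultdict

# Invented toy corpus; real co-occurrence statistics are vastly richer and noisier.
corpus = [
    "the table is blue", "the dog is brown", "a table stands here", "a dog stands here",
    "she offers help", "he offers tea", "she sells help", "he sells tea",
]


def context_vectors(sentences, window=1):
    """Count the words that appear within `window` positions of each word."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(a[k] * b[k] for k in a)  # missing keys in a Counter count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


vecs = context_vectors(corpus)
print(cosine(vecs["table"], vecs["dog"]))     # 1.0: identical contexts
print(cosine(vecs["table"], vecs["offers"]))  # 0.0: no shared contexts
```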

These epiphenomenal, acquired abstractions, the argument may go, expedite ChatGPT’s ability to learn, categorize, and generate linguistic expressions.

However, I have yet to see that thesis supplied with evidence (though it would be very cool if someday correct).

Recap: Three Similarities

I have shared three ways in which ChatGPT’s language production and processing resemble our own:

(1) both systems develop syntactic competence without relying on tree structures or grammatical rules,

(2) both organize linguistic items in a multidimensional vector space based on similarity, and

(3) both acquire language without innate grammatical categories, challenging nativist assumptions like Universal Grammar.

Implications re: AI and Cognitive Science

LLMs were not intentionally built to replicate human cognitive processes (scores of dissimilarities separate the two, some of which we will discuss next time). Thus I draw a few conclusions from the three similarities above:

Human-like linguistic behavior might emerge from general-purpose learning systems.

In other words, a few particular strategies for learning and generating language from raw input may be especially effective, whether the learner is carbon- or silicon-based. Granted, AI systems aren’t (currently) alive in a biological sense, but convergent evolution may be a useful lens for thinking about this.

Different architectures may well arrive at similar solutions when tasked with solving similar challenges — such as, in this case, tracking long-distance dependencies, segmenting continuous input into meaningful units, and resolving ambiguity — especially when operating under comparable constraints like highly variable and noisy language input, generally limited access to negative evidence, and finite processing/memory capacity.

Distantly related or unrelated species can converge on similar adaptive features (source: Wikipedia).

(What appears to be) linguistic competence doesn’t necessarily require human-specific cognitive mechanisms, which, prima facie, challenges theories holding that the language faculty is what distinguishes Homo sapiens from all else.

(However, I am very prepared to accept that there is something unique to how humans hierarchically parse through language and represent symbols symbolically, or that language distinguishes us in some respect from other biological organisms — much more can be said on this later).

The similarities do not resolve the question of whether or not LLMs are “really” using language or merely imitating it. Drawing from the philosophy of Wittgenstein, some have argued that LLMs do not interact with the practical, lived experiences or shared forms of life (Lebensformen) that underpin human language and therefore cannot “participate fully in the language game of truth.” Indeed, a whole host of literature concerns grounding, or the connection of LLM linguistic expressions to real-world referents; the jury is still out.

And many more questions await answers: do LLMs understand what they’re saying? Do they exhibit intent? More to the point, in what ways are their language capacities different from our own!?

These topics will surface in the next post in this series: Part IV!
